How Back-End Services Read Data from the Front End

In this article, I want to quickly show you how websites collect data. It's important to know this for two reasons:

- If you are a website visitor, it's good to know what sort of data can be collected about you.

- If you are a website owner, it's good to know what sort of data you can collect about your visitors.

Now, I have to add that in this article I won't go into the legal and ethical aspects of these questions. I leave these to you and your legal professional. I'll only show you what's possible with today's technology and what most websites already do.

Note: this article is also available in video format: here.

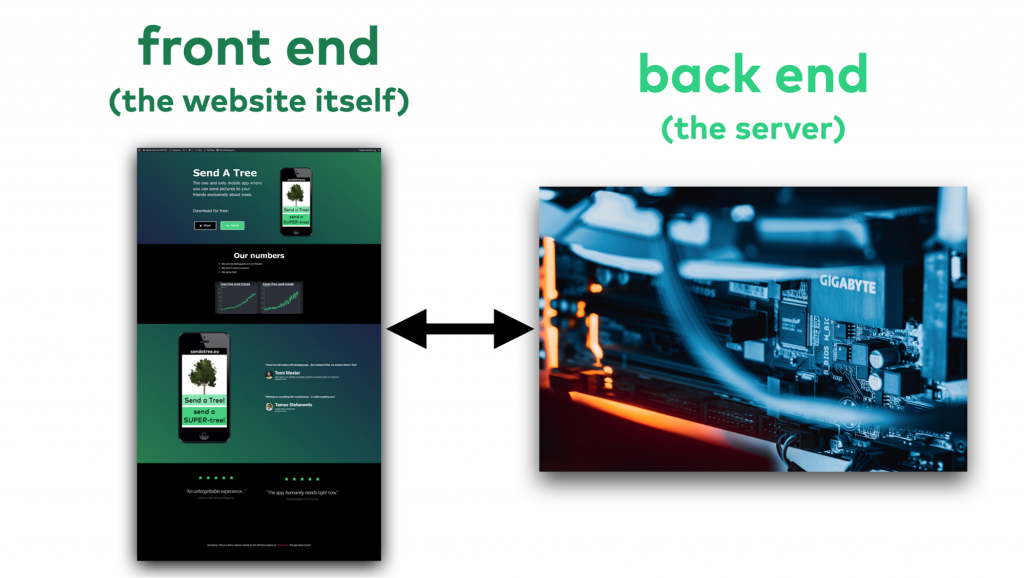

Back End and Front End

When it comes to a website, data collection can happen in two major places: on the front end and on the back end.

The front end is basically the website that the visitor sees in her browser. There, various scripts (mostly written in JavaScript) can pick up data points and send them to a data server.

The back end is the server that serves your website. It's practically invisible to your visitors, but it's there, and it collects data, too, even if the website's owner doesn't know about it. (Most of them don't.)

Front-End Data Collection (with JavaScript)

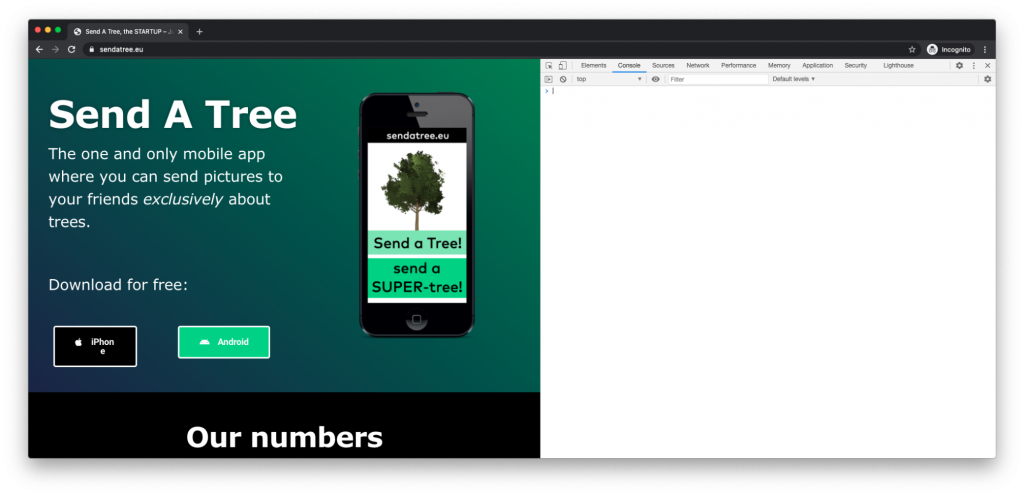

To make this easy to understand, I've created a demo website that you can use to try out the different data collection methods I'll show you!

LINK: sendatree.eu

Everything we'll do on this website can be done on 99.99% of other websites on the internet.

So I encourage you to do this with me:

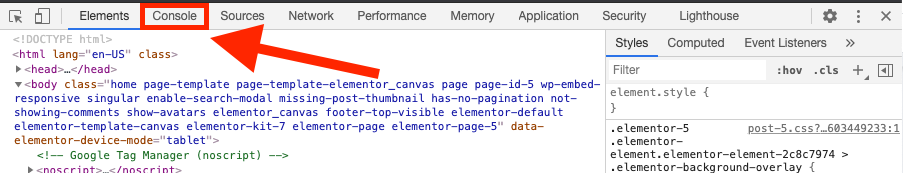

- Open up a Google Chrome browser and a new incognito window.

- Go to sendatree.eu.

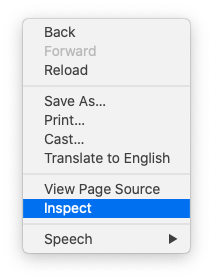

3. Right click on the web page and choose "Inspect"

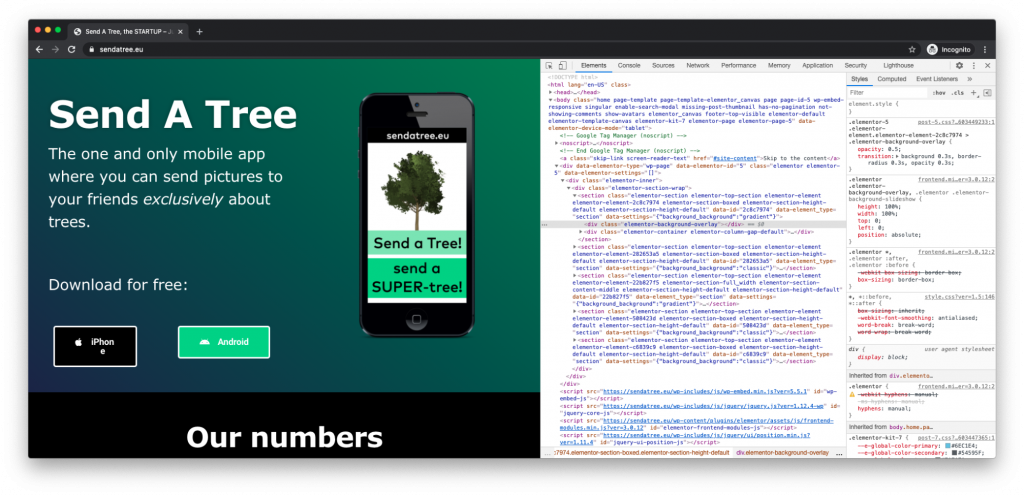

- You'll encounter the whole code base of the website on the right side of your browser:

- Choose "Panel" on the elevation menu:

6. Here, instead of sendatree.eu's HTML code, you'll meet a console where you tin can run JavaScript code.

And this is actually important:

Every single line of JavaScript code that we run here can be run automatically by the website itself.

In other words, every data point that you will mine about your own visit in this tutorial could be collected automatically by the website (and by any other website) about all of its visitors and sent to a data server to be analyzed.

I'll show you how, and I'll focus, of course, on the popular data collection techniques that most websites use nowadays.

Querying and collecting visitor data using JavaScript

document.location

The first and most obvious thing you can get from the console is information about the web page itself.

Type this into the console:

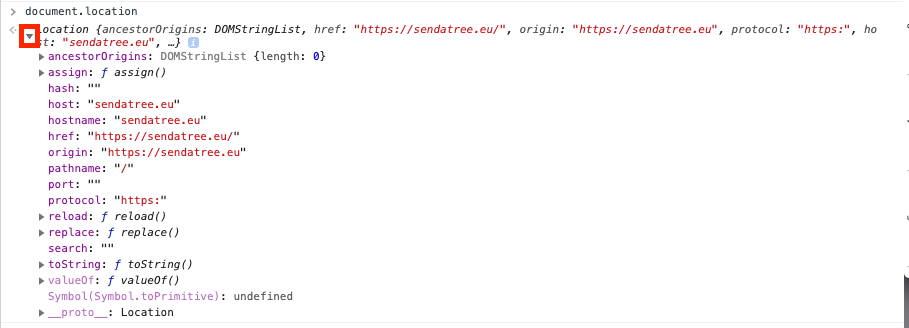

document.location

This will return a JavaScript Location object with important data points about the web page:

Location {ancestorOrigins: DOMStringList, href: "https://sendatree.eu/", origin: "https://sendatree.eu", protocol: "https:", host: "sendatree.eu", …}

You can go even deeper by clicking the little arrow on the left side.

Or you can simply query the exact data point you are interested in by typing, for example:

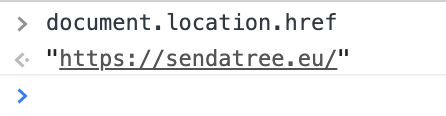

document.location.href

which will return only one thing: the full URL of the web page:

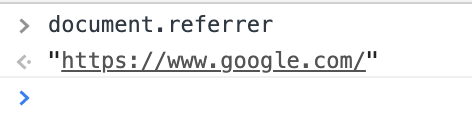

document.referrer

document.referrer

This is another frequently collected parameter. It shows the website the user clicked through from. It'll be empty for us, because we typed the sendatree.eu URL in directly. But if you Google "sendatree.eu", then click through from the search results and type document.referrer into the console, you'll see that it now returns google.com:

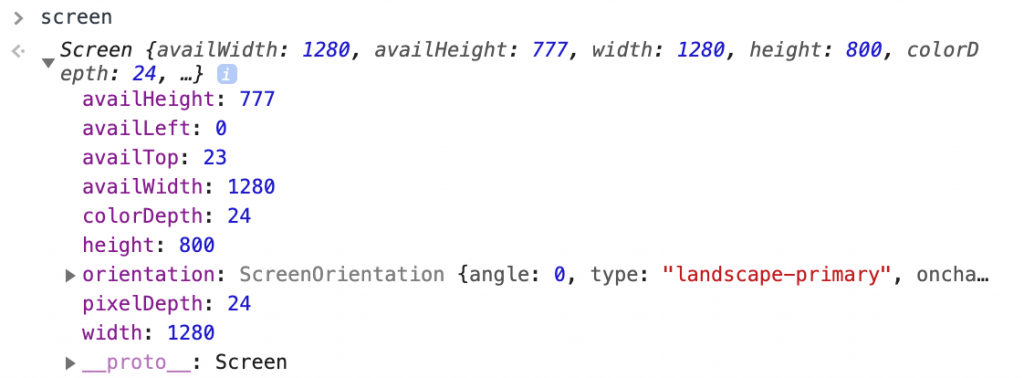

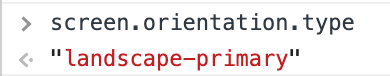
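Once a site has the referrer, it usually sorts each visit into a traffic source. Here's a minimal sketch of that idea; the function name classifyReferrer and the "direct"/"internal"/"external" labels are my own hypothetical choices, not a standard. An empty referrer counts as direct traffic (like our typed-in visit), a referrer on the site's own host as internal navigation, and anything else is reported by its hostname.

```javascript
// Hypothetical helper: classify a visit by its referrer.
// referrer: the value of document.referrer ("" when the URL was typed in directly)
// pageHost: the host of the current page, e.g. document.location.host
function classifyReferrer(referrer, pageHost) {
  if (!referrer) {
    return { source: "direct", host: null };
  }
  let host;
  try {
    host = new URL(referrer).host;
  } catch (e) {
    // Not a valid absolute URL: report it as unknown instead of throwing
    return { source: "unknown", host: null };
  }
  if (host === pageHost) {
    return { source: "internal", host };
  }
  return { source: "external", host };
}

// In the browser console you could run:
// classifyReferrer(document.referrer, document.location.host)
```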

screen

screen

Information about visitors' screen settings can be collected, too. And it's not just the screen resolution.

For instance, with the screen.orientation.type JavaScript snippet, one can get the current orientation of the user's screen (e.g. "landscape-primary"), which can be an indication of whether the user is on mobile or desktop.
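Here's a minimal sketch of reading these screen properties into one record. The function name describeScreen and the "probably mobile" rule are my own assumptions: treating a portrait orientation or a screen narrower than 768 CSS pixels (an arbitrary cut-off) as a mobile hint is only a heuristic, since tablets rotate and screens vary. The function takes the screen object as a parameter, so you can also try it outside a browser.

```javascript
// Hypothetical helper: summarize the screen properties discussed above.
// scr is expected to look like window.screen (width, height, availWidth, availHeight, orientation)
function describeScreen(scr) {
  // screen.orientation may be missing in some older browsers
  const orientation = scr.orientation ? scr.orientation.type : "unknown";
  return {
    width: scr.width,
    height: scr.height,
    availWidth: scr.availWidth,
    availHeight: scr.availHeight,
    orientation: orientation,
    // Rough heuristic only (assumed 768 px cut-off): portrait or narrow screens hint at mobile
    probablyMobile: orientation.startsWith("portrait") || scr.width < 768,
  };
}

// In the browser console: describeScreen(screen)
```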

navigator.userAgent

Since we are talking about mobile vs. desktop, it's interesting to take a look at the

navigator.userAgent

property, too.

This will return something like this:

"Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"

As you can see, it's mostly about the browser the visitor uses. But you can also find the operating system of her computer (or in this case, mine), which can be an even more direct indication of whether she's on mobile or desktop.
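A website would typically boil this string down to a few coarse labels. Below is a simplified, hypothetical parser (guessPlatform is my own name); real projects usually rely on a maintained user-agent parsing library, because these strings are inconsistent and easy to spoof. Order matters here: iPhone user agents contain "like Mac OS X" and Android user agents contain "Linux", so the more specific checks come first.

```javascript
// Hypothetical, simplified user-agent parser: returns a coarse OS label and a mobile hint.
function guessPlatform(userAgent) {
  // Checked in order: more specific patterns before generic ones
  const rules = [
    [/iPhone|iPad|iPod/, "iOS"],
    [/Android/, "Android"],
    [/Windows NT/, "Windows"],
    [/Mac OS X/, "macOS"],
    [/CrOS/, "ChromeOS"],
    [/Linux/, "Linux"],
  ];
  let os = "unknown";
  for (const [pattern, label] of rules) {
    if (pattern.test(userAgent)) {
      os = label;
      break;
    }
  }
  // Many mobile browsers include "Mobi" (e.g. "Mobile Safari") in their user agent
  const mobile = /Mobi|iPhone|Android/.test(userAgent);
  return { os, mobile };
}

// In the browser console: guessPlatform(navigator.userAgent)
```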

You'll find many more things in navigator if you go deeper.

But let me highlight one more frequently collected piece of data:

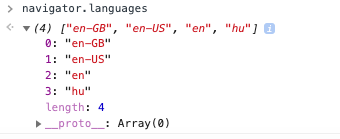

navigator.languages

navigator.languages

This shows the visitor's preferred languages, in order of priority:

Let's talk about cookies, too!

One of the most discussed aspects of data collection is cookies.

As you can see, websites can collect a bunch of data without cookies, too. But I have to admit that cookies complicate things even more, because they stay in the visitor's browser even after she closes the website.

That means that if you visit a website today and then visit it again one week later from the same computer and the same browser, the website will know, with the help of cookies, that you are the same visitor.

Let's see how this works!

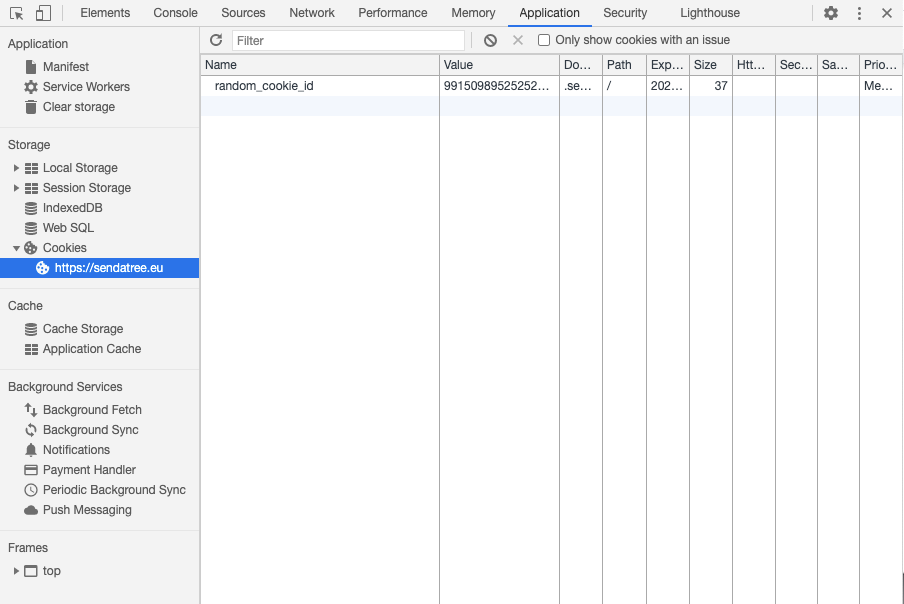

On the same top menu where you selected Console, now go to Application. Then open Cookies in the left sidebar and, within it, select https://sendatree.eu.

You can do this for any website, and you'll see a list of all the cookies that the website uses to track its users.

For this example web page, you'll see only one cookie (random_cookie_id), and I set it only to demonstrate how it works. This random_cookie_id does exactly what I said cookies do: it saves a cookie with a random value into your browser, which will be used to "flag" that you are the same visitor when you come back and visit this website next week.

It has a size, an expiration date and, very importantly, it can't be used across different domains. So if you visit another website, like data36.com, the cookie created by sendatree.eu, naturally, won't be seen by data36.com.

I won't go into detail about how this cookie is set. (It's a few lines of code.)

But I'll show you how you (and, of course, a website) can query it with JavaScript.

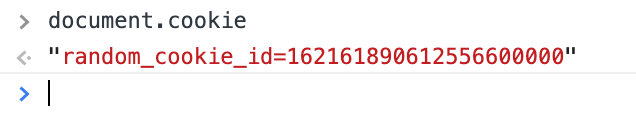

document.cookie

Just go back to the Console and type:

document.cookie

And there you have all the cookies that the given website stores in your browser (except those marked HttpOnly, which JavaScript can't read).

This time, it's only one cookie.

But when you visit a real website, you'll find many, many more, each storing different pieces of information. Just go to your favorite e-commerce site, and I bet that you'll see at least a few of these commonly used cookies:

- Google Analytics cookies (usually called _ga, _gid)

- Facebook cookies (_fbp)

- Twitter cookies (twid)

- and probably many more…
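document.cookie returns all readable cookies as one "name=value; name=value" string. The minimal sketch below splits that string into an object and then looks for the well-known tracking cookie names listed above. The function names and the small name-to-company table are my own illustration and only cover these examples; real sites set many more.

```javascript
// Split a document.cookie-style string ("a=1; b=2") into { a: "1", b: "2" }
function parseCookies(cookieString) {
  const cookies = {};
  for (const part of cookieString.split(";")) {
    const pair = part.trim();
    if (!pair) continue;
    const eq = pair.indexOf("=");
    // Simplification: a pair without "=" is kept as a name with an empty value
    const name = eq === -1 ? pair : pair.slice(0, eq);
    const value = eq === -1 ? "" : pair.slice(eq + 1);
    try {
      cookies[name] = decodeURIComponent(value);
    } catch (e) {
      cookies[name] = value; // keep the raw value if it isn't valid percent-encoding
    }
  }
  return cookies;
}

// Illustrative table of the well-known cookie names mentioned above
const KNOWN_TRACKERS = { _ga: "Google Analytics", _gid: "Google Analytics", _fbp: "Facebook", twid: "Twitter" };

// Return the companies whose cookies appear in the cookie string (without duplicates)
function detectTrackers(cookieString) {
  const names = Object.keys(parseCookies(cookieString));
  const found = names
    .filter((n) => Object.prototype.hasOwnProperty.call(KNOWN_TRACKERS, n))
    .map((n) => KNOWN_TRACKERS[n]);
  return [...new Set(found)];
}

// In the browser console: parseCookies(document.cookie); detectTrackers(document.cookie)
```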

You might know that these cookies are set by tracking scripts that the website owner placed there mainly for business purposes, like measuring website traffic (Google Analytics), running remarketing campaigns (Facebook, Twitter, etc.) and so on. When these marketing-related JavaScript snippets run, they send all the data I showed you (and more) to these large companies, too.

Note: Generally speaking, this is not dangerous… But you know, while the data that website owners can collect about you is mostly anonymous, it isn't always. When it goes to Facebook, for instance, it can be tied directly to your Facebook profile (but only if you are logged in to Facebook while browsing). That's how these big companies collect so much data about you, even on non-Facebook, non-Google, non-Twitter websites.

Is it good or bad? I don't know, but it's good to know how it works, for sure.

How the data goes to a data server (e.g. JavaScript + variables + XMLHttpRequest)

As I said, any JavaScript code embedded in a website can query the same data and website usage information about all of its users that you have queried about yourself in the Console.

How?

There are multiple ways to get this done, but here's a simple example.

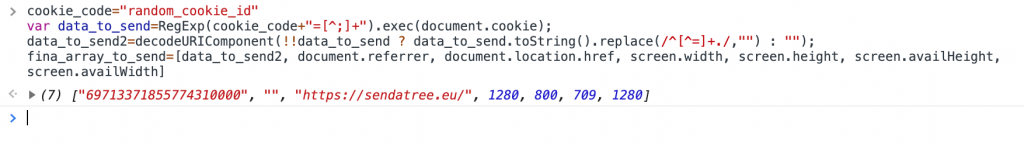

These few lines will store a few important data points into a JavaScript array:

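```javascript
var cookie_code = "random_cookie_id";
// Find "random_cookie_id=<value>" in the page's cookies, then strip "name=" and decode the value
var data_to_send = RegExp("(?:^|; )" + cookie_code + "=[^;]+").exec(document.cookie);
var data_to_send2 = decodeURIComponent(data_to_send ? data_to_send.toString().replace(/^[^=]+./, "") : "");
// Cookie ID, referrer, full URL and screen dimensions
var final_array_to_send = [data_to_send2, document.referrer, document.location.href, screen.width, screen.height, screen.availHeight, screen.availWidth];
```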

So we collected:

- a unique cookie id to "flag" the visitor

- the referrer (where the visitor came from)

- the webpage she visited (full URL)

- and a few more things about the resolution of the screen

(Of course, this list can be much longer if you add everything that I showed you above.)

It's all pushed into an array called final_array_to_send.

And it only needs one final touch: an XMLHttpRequest.

Something like this:

```javascript
// data_server_IP is a placeholder for the URL of your data server
var h36 = new XMLHttpRequest();
h36.open("POST", data_server_IP, true); // asynchronous POST request
h36.setRequestHeader('User-Type', 'none');
h36.setRequestHeader('Content-type', 'application/x-www-form-urlencoded');
h36.send(final_array_to_send); // the array is sent as a comma-separated string
```

(Note: again, don't worry too much about fully understanding this. I just put it here so you can see that it's really just a few lines of JavaScript and nothing more.)

This XMLHttpRequest sends the data in this array to a data server (note: usually not the same server that serves the website), where it can be stored and analyzed.

Now, this is only one line of data on a server… but this process can be done for every visited web page, every click, every typed character, every scroll event, even every mouse movement. The sky's the limit. And as I said, this can be scaled to all website visitors… So, adding all of these up, I hope you can see how a single website can produce millions of data points.
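Sending one request per mouse movement would be wasteful, so a tracker that records many kinds of events typically buffers them and sends them in batches. The sketch below is a hypothetical illustration of that idea, not how any particular analytics product works: createTracker, the default batch size of 20 and the event fields are my own assumptions, and the actual sending is left to a send function you provide (for example, one wrapping the XMLHttpRequest above).

```javascript
// Hypothetical event tracker: buffers events and hands them to `send` in batches.
// send: a function that receives an array of events (e.g. a wrapper around XMLHttpRequest)
function createTracker(send, batchSize = 20) {
  let buffer = [];
  function flush() {
    if (buffer.length === 0) return;
    const batch = buffer;
    buffer = []; // start a fresh buffer before sending
    send(batch);
  }
  function track(type, detail = {}) {
    // type and time are set last so `detail` can't overwrite them
    buffer.push({ ...detail, type, time: Date.now() });
    if (buffer.length >= batchSize) flush();
  }
  return { track, flush };
}

// Browser usage sketch (not run here):
// const tracker = createTracker((events) => console.log(events));
// document.addEventListener("click", (e) => tracker.track("click", { x: e.clientX, y: e.clientY }));
// window.addEventListener("scroll", () => tracker.track("scroll", { y: window.scrollY }));
```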

Note: In practice, to make this actually happen, you'll have to write a script on the front end (for querying and collecting the data and initiating this XMLHttpRequest) and another script on a data server's back end that receives this data and stores it in a file or an SQL database. I won't get into that now, but I might get back to it in another tutorial.
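If you're curious what the receiving side could look like, here's a minimal sketch of such a back-end script in Node.js, using only the built-in http and fs modules. Everything in it is an assumption for illustration: the port, the file name hits.log and the tab-separated line format are my own choices. It answers the CORS preflight request that the browser sends before the cross-origin POST (the custom User-Type header triggers one), then appends one line per request with a timestamp, the sender's IP address and the raw body. Behind a proxy or load balancer, the IP it sees would be the proxy's, and a real setup would also need body-size limits, validation and a proper database.

```javascript
const http = require("http");
const fs = require("fs");

// Build one tab-separated log line: timestamp, client IP, raw request body.
// Tabs and newlines inside the body are replaced so each hit stays on one line.
function formatHit(body, ip, date = new Date()) {
  const safeBody = String(body).replace(/[\t\r\n]+/g, " ");
  return `${date.toISOString()}\t${ip}\t${safeBody}\n`;
}

// Start a tiny collector server (hypothetical port and file name; no body-size limit in this sketch).
function startCollector(port = 8080, logFile = "hits.log") {
  const corsHeaders = {
    "Access-Control-Allow-Origin": "*", // let pages on other origins post here
    "Access-Control-Allow-Methods": "POST, OPTIONS",
    "Access-Control-Allow-Headers": "Content-Type, User-Type",
  };
  const server = http.createServer((req, res) => {
    if (req.method === "OPTIONS") {
      // CORS preflight: the browser asks for permission before the real POST
      res.writeHead(204, corsHeaders);
      res.end();
      return;
    }
    if (req.method !== "POST") {
      res.writeHead(405, corsHeaders);
      res.end();
      return;
    }
    let body = "";
    req.setEncoding("utf8");
    req.on("data", (chunk) => { body += chunk; });
    req.on("end", () => {
      const line = formatHit(body, req.socket.remoteAddress);
      fs.appendFile(logFile, line, (err) => {
        res.writeHead(err ? 500 : 204, corsHeaders);
        res.end();
      });
    });
  });
  server.listen(port);
  return server;
}

// Usage (not run here): startCollector(8080, "hits.log");
```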

Back-End Data Collection (apache2 log)

Collecting data on the front end is pretty standard. As I said, every major online service does that on many websites: Google Analytics, Facebook, Twitter, Hotjar, etc. And quite a few website owners collect their own data, too.

But that's not all! When someone visits a website, the server that serves this website also stores data about this event. The funny thing is that most website owners don't even know that they have this detailed data log… It's 100% automatic, and it's how the internet works.

It's just like when you go into a grocery store and ask a worker whether they have this or that product: she'll see you, and when she sees you again, she'll know that you were looking for this or that product before.

When you visit a website and "ask the server" to display it in your browser, the server sees your IP address, and it also knows which web page you wanted to see. There's no way around it. I mean, it can be masked or hidden from humans, but by default this data is generated and stored on almost every web server that serves websites on the internet today.

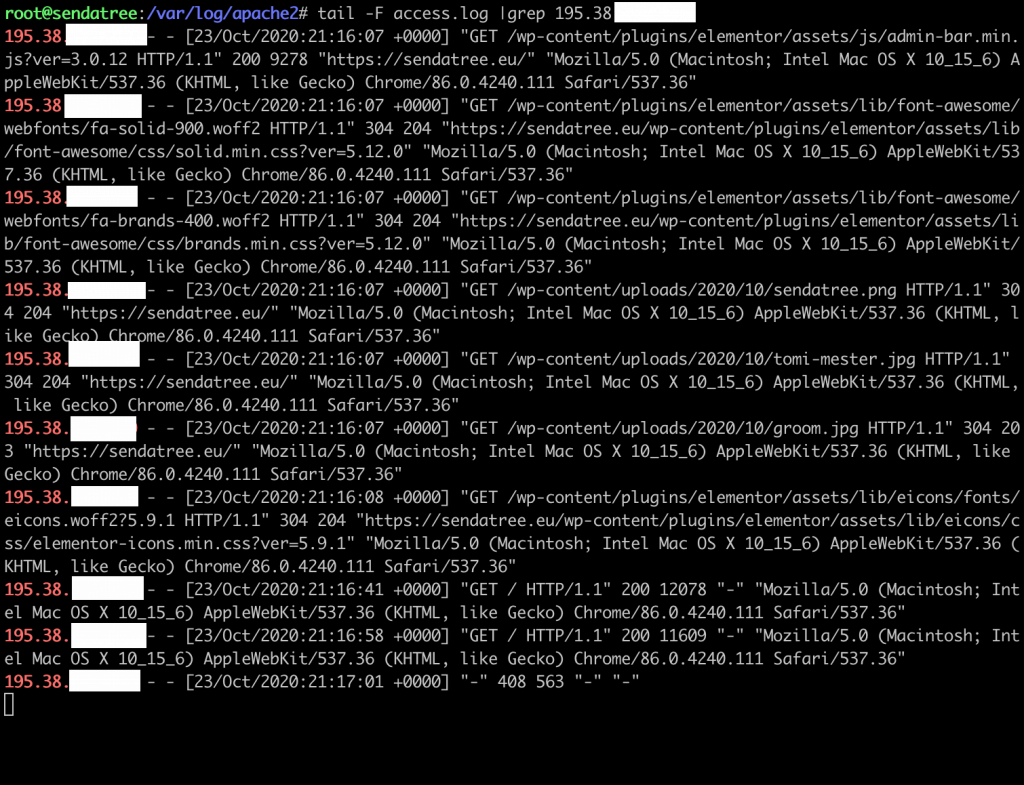

If the website runs on WordPress with an Apache web server, for instance, like sendatree.eu does, then this will be stored in an apache2 log.

If you have a website and you know how to use the command line, you can log in and find this log, too.

Here is the data that's generated about me for a single web page visit on sendatree.eu.

This data is collected regardless of what sort of scripts the website runs (or doesn't run) on the front end. It's simply a plain log of the requests visitors made to the server. (To make this clear: this data collection works even if a user blocks JavaScript on the front end.)

As you can see, this doesn't contain a unique ID, but it does contain an IP address, for instance. (It's masked for me, sorry. :))
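If you want to process these log lines with a script rather than by eye, here's a minimal sketch. It assumes Apache's standard "combined" log format, which many default installations use (on Debian or Ubuntu the file is usually /var/log/apache2/access.log). The sample line is made up for illustration (203.0.113.7 is a documentation address), and the regular expression is deliberately simplified: lines with escaped quotes or a malformed request line will just return null.

```javascript
// Simplified parser for one line in Apache's "combined" log format:
// %h %l %u %t "%r" %>s %b "%{Referer}i" "%{User-agent}i"
const COMBINED = /^(\S+) (\S+) (\S+) \[([^\]]+)\] "(\S+) (\S+) ([^"]*)" (\d{3}) (\d+|-) "([^"]*)" "([^"]*)"$/;

function parseAccessLogLine(line) {
  const m = COMBINED.exec(line);
  if (!m) return null; // not in the expected format
  return {
    ip: m[1],
    time: m[4],
    method: m[5],
    path: m[6],
    protocol: m[7],
    status: Number(m[8]),
    bytes: m[9] === "-" ? 0 : Number(m[9]),
    referrer: m[10],
    userAgent: m[11],
  };
}

// Made-up example line (documentation IP address)
const sample = '203.0.113.7 - - [02/Nov/2020:10:15:00 +0100] "GET / HTTP/1.1" 200 5120 "https://www.google.com/" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_6)"';
console.log(parseAccessLogLine(sample));
```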

Also, with a few tweaks, this can be combined with the front-end data that I showed you earlier. So together, back-end and front-end data can paint a very detailed picture of a website visitor.
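One possible way to do this (my own assumption, not necessarily what the author has in mind): if the data server records the sender's IP address and a timestamp with every front-end hit, each hit can be matched to the access-log entry with the same IP address and the closest timestamp within a small window. The function name, the record shapes (timestamps already converted to milliseconds) and the 5-second window are hypothetical. IP addresses are shared and change (mobile networks, offices, VPNs), so such matches are a best guess, not proof.

```javascript
// Hypothetical join of front-end hits with back-end log entries.
// hits:    [{ ip, time (ms since epoch), visitorId }]  from the data server
// entries: [{ ip, time (ms since epoch), path }]        from the access log
// Returns each hit paired with the closest log entry from the same IP within maxGapMs (or null).
function matchHitsToLog(hits, entries, maxGapMs = 5000) {
  return hits.map((hit) => {
    let best = null;
    for (const entry of entries) {
      if (entry.ip !== hit.ip) continue;
      const gap = Math.abs(entry.time - hit.time);
      if (gap <= maxGapMs && (best === null || gap < Math.abs(best.time - hit.time))) {
        best = entry;
      }
    }
    return { hit, entry: best };
  });
}
```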

Limitations

As I said, I don't want to talk about legal aspects here. I leave that to you and your legal professionals.

I just wanted to show you that technology and the availability of data are not a limitation. You don't have to be a huge internet company (Facebook, Amazon, Google, etc.) to be able to collect millions or even billions of data points about your website visitors.

But I encourage you to think about doing this ethically. As a rule of thumb, I recommend not collecting and using data about your users or visitors that you wouldn't want to be collected about you.

A simple example:

If a website visitor blocks JavaScript because she doesn't want to be monitored on the front end, it's probably not very ethical to use her back-end data in your data science projects (which, as I showed you, will be collected regardless).

Conclusion

Okay, I hope that this article helped you see and better understand how data collection works on websites. Whether you are a website visitor or an owner, it's good to know how these things work. I know I didn't go too much into the tech details, but if you want to hear more about them in a future episode, just let me know in the comments!

- If you want to learn more about how to become a data scientist, take my 50-minute video course: How to Become a Data Scientist. (It's free!)

- Also check out my 6-week online course: The Junior Data Scientist's First Month video course.

Thanks,

Tomi Mester

Recommended resources:

- https://developer.mozilla.org/en-US/docs/Web/API/XMLHttpRequest

- https://httpd.apache.org/docs/2.4/logs.html

- https://www.w3schools.com/js/js_cookies.asp

Source: https://data36.com/data-collection-websites-javascript-front-end-apache2-back-end/
